Memory Systems in AI Agents: Episodic vs. Semantic

AI Agent Mastery Newsletter - Section 1: Foundations

Picture this: your recommendation agent suddenly starts suggesting winter coats to users in July, despite months of learning seasonal patterns. The culprit? A memory system that couldn't distinguish between what happened (episodic events) and what it learned (semantic knowledge). This isn't just a theoretical problem. It's the kind of production issue that can cost real conversions and user trust.

The Memory Architecture That Shapes Intelligence

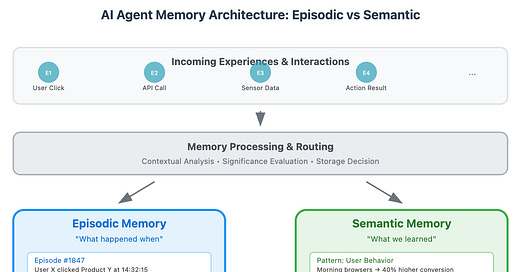

At the heart of every sophisticated AI agent lies a memory system that determines not just what information persists, but how that information influences future decisions. The distinction between episodic and semantic memory isn't merely academic—it's the difference between an agent that adapts intelligently and one that gets trapped in brittle patterns.

Episodic memory captures the "what happened when" of an agent's experience. Think of it as a detailed journal of specific interactions: user X clicked product Y at timestamp Z, resulting in outcome W. This memory type preserves the contextual richness of individual events, maintaining the temporal sequence and environmental conditions that surrounded each decision.
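One way to picture such a journal entry is as a small immutable record. The sketch below is illustrative only: the `EpisodicRecord` fields and the `episodes_between` helper are assumed names, not part of any particular framework.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class EpisodicRecord:
    """One specific event: who did what, when, and what came of it."""
    user_id: str
    action: str
    item_id: str
    outcome: str
    timestamp: datetime
    context: dict = field(default_factory=dict)  # e.g. device, session, season


def episodes_between(episodes, start, end):
    """Return episodes with start <= timestamp < end, in temporal order."""
    selected = [e for e in episodes if start <= e.timestamp < end]
    return sorted(selected, key=lambda e: e.timestamp)
```

Keeping the timestamp and context on every record is what lets later stages reconstruct the sequence and conditions around a decision.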

Semantic memory, by contrast, distills patterns and relationships from these experiences into generalized knowledge. It's the difference between remembering "user X bought running shoes on Monday" and learning "users who browse athletic wear in the morning are 40% more likely to purchase within 24 hours."
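As a rough sketch of that distillation step, the function below collapses individual episodes into one purchase rate per segment. The segment labels, the dict layout, and the sample data are made up for illustration.

```python
from collections import defaultdict


def purchase_rate_by_segment(episodes):
    """Collapse individual episodes into one purchase rate per segment.

    Each episode is a dict with a 'segment' label and a boolean 'purchased'.
    """
    totals = defaultdict(int)
    purchases = defaultdict(int)
    for ep in episodes:
        totals[ep["segment"]] += 1
        if ep["purchased"]:
            purchases[ep["segment"]] += 1
    return {seg: purchases[seg] / totals[seg] for seg in totals}


# Hypothetical episodes; a real system would read these from the episodic store
episodes = [
    {"segment": "morning_athletic", "purchased": True},
    {"segment": "morning_athletic", "purchased": True},
    {"segment": "morning_athletic", "purchased": False},
    {"segment": "evening_athletic", "purchased": False},
    {"segment": "evening_athletic", "purchased": True},
]
rates = purchase_rate_by_segment(episodes)
```

The individual events are gone from `rates`; only the generalized relationship between segment and purchase likelihood remains.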

The Hidden Complexity of Memory Integration

The most intriguing aspect of agent memory systems lies not in either type alone, but in their interaction. A driver-assistance system such as Tesla's Autopilot is a useful way to picture this. Conceptually, such a system can draw on episodic records of specific driving scenarios: that particular intersection where visibility was poor, the exact weather conditions during a near-miss event. At the same time, it relies on semantic knowledge about general driving patterns: how to handle four-way stops, when to be more cautious around school zones.

The genius emerges when these memory types work in concert. When the vehicle encounters a similar intersection, episodic memory provides specific contextual details while semantic memory supplies generalized rules. This dual-memory approach enables the agent to be both adaptive to novel situations and consistent in its fundamental behaviors.
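One simple way to combine the two at decision time is to prefer specific experience when there is enough of it and fall back to a general rule otherwise. The sketch below assumes exact feature matching and a hypothetical `min_matches` cutoff; real systems would more likely use similarity search and blend the two sources.

```python
def decide(situation, episodes, semantic_rules, min_matches=3):
    """Pick an action using similar past episodes, else a general rule.

    situation: dict of features, e.g. {"kind": "four_way_stop", "visibility": "poor"}
    episodes: list of dicts with 'features', 'action', and a numeric 'reward'
    semantic_rules: dict mapping situation kind -> default action
    """
    matches = [e for e in episodes if e["features"] == situation]
    if len(matches) >= min_matches:
        # Enough specific experience: reuse the action with the best average reward
        by_action = {}
        for e in matches:
            by_action.setdefault(e["action"], []).append(e["reward"])
        best = max(by_action, key=lambda a: sum(by_action[a]) / len(by_action[a]))
        return best, "episodic"
    # Too little specific evidence: fall back to generalized knowledge
    return semantic_rules[situation["kind"]], "semantic"
```

Returning the source alongside the action makes it easier to audit which memory type drove a given decision.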

Large-scale recommendation systems, such as those Google operates, suggest another compelling pattern. Such a system can keep episodic traces of user interaction sequences: not just what you clicked, but the entire context of your browsing session. This episodic richness feeds into semantic models that learn broader patterns about content preferences, timing, and user intent.

Memory Decay and the Forgetting Paradox

Here's where most implementations stumble: the assumption that more memory always equals better performance. In reality, strategic forgetting proves just as crucial as remembering. Episodic memory systems that retain every interaction indefinitely become computationally unwieldy and can amplify outdated patterns.

The solution lies in sophisticated decay mechanisms. Recent episodic memories maintain high fidelity, while older ones compress into semantic summaries or fade entirely. This isn't random deletion—it's intelligent curation based on relevance, impact, and representativeness.
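A decay mechanism of this kind can be sketched as a retention score. The version below covers only recency and impact (representativeness is left out), and the exponential form, the 30-day half-life, and the 0.1 threshold are all assumptions chosen for illustration.

```python
import math


def retention_score(age_days, impact, half_life_days=30.0):
    """Score an episode for retention: impact weighted by exponential recency decay."""
    decay = math.exp(-math.log(2) * age_days / half_life_days)
    return impact * decay


def prune(episodes, keep_threshold=0.1):
    """Split episodes into those kept in full and those due for summarizing."""
    keep, compress = [], []
    for ep in episodes:
        score = retention_score(ep["age_days"], ep["impact"])
        (keep if score >= keep_threshold else compress).append(ep)
    return keep, compress
```

With these defaults, an episode's score halves every 30 days, so a high-impact event stays in the detailed store far longer than a routine one of the same age.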

Reinforcement learning agents suggest a particularly elegant approach: keep detailed episodic records during active learning phases, then gradually compress them into semantic policy updates. The episodic memories that contributed most to successful outcomes persist longer, while routine interactions fade more quickly.
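The idea that high-contribution episodes outlive routine ones can be sketched with a bounded store that always evicts its lowest-scoring entry. This is an illustrative sketch, not a description of any specific lab's implementation; `PriorityEpisodeStore` and the `contribution` score are hypothetical names.

```python
import heapq
import itertools


class PriorityEpisodeStore:
    """Fixed-capacity store that evicts the lowest-contribution episode first."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heap = []                # min-heap of (contribution, seq, episode)
        self._seq = itertools.count()  # tie-breaker so episodes are never compared

    def add(self, episode, contribution):
        """Insert an episode; return the evicted episode, or None if there was room."""
        entry = (contribution, next(self._seq), episode)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
            return None
        # heappushpop inserts the entry, then removes and returns the smallest one
        evicted = heapq.heappushpop(self._heap, entry)
        return evicted[2]

    def episodes(self):
        """Return stored episodes, highest contribution first."""
        return [ep for _, _, ep in sorted(self._heap, reverse=True)]
```

Note that a new episode with a lower score than everything already stored is itself the one evicted, which is the intended behavior for routine interactions.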

The State Management Challenge

Consider the complexity of managing these dual memory systems in production. Each episodic entry requires storage, indexing, and retrieval mechanisms. Semantic knowledge needs updating mechanisms that don't catastrophically overwrite valuable learned patterns. The timing of when to consolidate episodic memories into semantic knowledge becomes a critical design decision.
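One common way to update learned knowledge without overwriting it outright is an exponential moving average. The sketch below assumes the semantic store is a plain dict of numeric estimates; `update_semantic` and the `learning_rate` default are illustrative choices.

```python
def update_semantic(store, key, observed, learning_rate=0.1):
    """Blend a new estimate into stored knowledge instead of overwriting it."""
    if key not in store:
        store[key] = observed
    else:
        store[key] = (1 - learning_rate) * store[key] + learning_rate * observed
    return store[key]
```

A small `learning_rate` makes the store slow to change and resistant to one unusual batch; a large one tracks recent data closely at the cost of stability.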

The memory consolidation process itself presents fascinating trade-offs. Aggressive consolidation reduces storage requirements and improves inference speed, but may lose important contextual nuances. Conservative consolidation preserves detail but can lead to memory bloat and slower decision-making.

Practical Implementation Insights

A common pattern in production systems is a hierarchical memory architecture. Short-term episodic buffers capture immediate interactions with high fidelity. Medium-term episodic stores maintain contextually rich records for recent significant events. Long-term semantic knowledge bases distill patterns from historical data.
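The three tiers can be sketched with standard-library containers. The class below is a minimal illustration: `TieredMemory`, the capacities, and the `significance` cutoff are assumptions, and the long-term tier is reduced to simple counts.

```python
from collections import Counter, deque


class TieredMemory:
    """Three tiers: short-term buffer, medium-term significant events, long-term counts."""

    def __init__(self, short_capacity=100, medium_capacity=1000, significance=0.5):
        self.short_term = deque(maxlen=short_capacity)    # every recent interaction
        self.medium_term = deque(maxlen=medium_capacity)  # significant events only
        self.long_term = Counter()                        # (action, outcome) counts
        self.significance = significance

    def record(self, action, outcome, impact):
        event = {"action": action, "outcome": outcome, "impact": impact}
        self.short_term.append(event)
        if impact >= self.significance:
            self.medium_term.append(event)
        self.long_term[(action, outcome)] += 1
```

Each tier trades detail for retention: the short-term buffer forgets quickly but keeps everything, while the long-term tier keeps only aggregates.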

The key insight that separates robust systems from fragile ones lies in the boundary conditions. How does your agent handle memory conflicts when episodic evidence contradicts semantic knowledge? What happens when memory systems become unavailable due to infrastructure failures? These edge cases reveal the true sophistication of your memory architecture.
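Both edge cases can be handled in one small reconciliation function. The sketch below is one possible policy, not a standard one: it weights recent observations by how many there are and treats `None` as "that store is unavailable". The name `resolve` and the `min_evidence` default are assumptions.

```python
def resolve(semantic_estimate, episodic_outcomes, min_evidence=20, default=None):
    """Reconcile a learned estimate with recent observations.

    semantic_estimate: learned rate in [0, 1], or None if the store is unreachable
    episodic_outcomes: recent 0/1 outcomes, or None if the store is unreachable
    """
    if semantic_estimate is None and not episodic_outcomes:
        return default            # both sources missing: return a safe default
    if not episodic_outcomes:
        return semantic_estimate  # no recent evidence: trust learned knowledge
    recent = sum(episodic_outcomes) / len(episodic_outcomes)
    if semantic_estimate is None:
        return recent             # semantic store down: use what was observed
    # Weight recent evidence by how much of it there is, capped at full trust
    weight = min(len(episodic_outcomes) / min_evidence, 1.0)
    return weight * recent + (1 - weight) * semantic_estimate
```

A handful of contradicting episodes only nudges the answer, while a sustained run of them eventually overrides the learned estimate.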

Building Your Memory-Aware Agent

Start with this simple but powerful framework: implement a dual-store system where recent interactions flow into an episodic buffer and a consolidation step periodically extracts semantic patterns from it. Here's the core structure:

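    import time
    from collections import Counter, deque

    class AgentMemory:
        def __init__(self, episodic_capacity=10000, consolidation_threshold=1000):
            # Stand-in for CircularBuffer: a deque drops its oldest entry when full
            self.episodic_buffer = deque(maxlen=episodic_capacity)
            # Stand-in for SemanticKnowledgeBase: (action, outcome) -> count
            self.semantic_store = Counter()
            self.consolidation_threshold = consolidation_threshold
            self.unconsolidated = 0  # episodes recorded since the last consolidation

        def record_experience(self, state, action, outcome, context):
            # Store detailed episodic record
            episode = {"state": state, "action": action, "outcome": outcome,
                       "context": context, "timestamp": time.time()}
            self.episodic_buffer.append(episode)
            self.unconsolidated += 1
            # Trigger consolidation if threshold reached
            if self.unconsolidated >= self.consolidation_threshold:
                self.consolidate_memories()

        def consolidate_memories(self):
            # Extract patterns from episodes not yet consolidated
            if self.unconsolidated == 0:
                return
            recent = list(self.episodic_buffer)[-self.unconsolidated:]
            self.semantic_store.update(self.extract_semantic_patterns(recent))
            self.unconsolidated = 0

        def extract_semantic_patterns(self, episodes):
            # Placeholder extractor: swap in a domain-specific method
            return Counter((e["action"], e["outcome"]) for e in episodes)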

The beauty of this approach lies in its configurability. Adjust consolidation_threshold to balance memory freshness against computational overhead. Experiment with different pattern extraction methods to optimize for your specific domain.

The Path Forward

Memory systems in AI agents represent one of the most underexplored areas with immediate practical impact. The agents that will dominate tomorrow's applications won't just be those with better algorithms—they'll be those with more sophisticated memory architectures that enable true learning from experience.

Your next production agent shouldn't just process information—it should remember, learn, and adapt. The difference between episodic and semantic memory isn't just a design choice; it's the foundation of intelligent behavior that scales.